Block AI Bots from Crawling Your Website

AI companies such as OpenAI (ChatGPT), Google (Gemini), Perplexity and others use automated bots to collect data from websites, often to train their AI models. While this can help improve AI systems, you might not want your website’s content used without permission.

In this guide, you’ll learn simple, safe ways to block AI bots from crawling your website.

What Are AI Bots?

AI bots are automated crawlers, similar to search engine bots like Googlebot or Bingbot, that visit websites to gather data.

However, unlike search engine bots (which index pages to show in search results), AI bots often collect data to train artificial intelligence models.

Common AI Crawlers

Here are a few well-known AI bots you might want to block:

| Bot Name | Company / Purpose |
|---|---|
| GPTBot | Used by OpenAI (ChatGPT, GPT models) |
| CCBot | Used by Common Crawl (large-scale web data collection) |
| ClaudeBot / anthropic-ai | Used by Anthropic (Claude AI) |
| Google-Extended | Used by Google to control whether your content is used for Gemini (AI models) |
| FacebookBot / Meta-ExternalAgent | Used by Meta for AI research |
| Amazonbot | Used by Amazon for AI and data indexing |

Why You Might Want to Block AI Bots

Here are some reasons website owners choose to block them:

- ❌ You don’t want your content used to train AI models

- 🔒 You want to protect your original articles and images

- 💰 You’re running a membership or paid content site

- 📉 You want to control how your content appears online

Blocking AI bots gives you more control over your website’s data.

How to Block AI Bots Using robots.txt

The easiest way to block AI bots is by editing your website’s robots.txt file.

📂 What is robots.txt?

It’s a small text file in your website’s root folder (e.g., yourwebsite.com/robots.txt) that tells bots what they can or cannot access.
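To see how a crawler that honours robots.txt applies these rules, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content and URLs are made-up examples: GPTBot is shut out completely, while every other bot is only kept out of a hypothetical /private/ folder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical example robots.txt: GPTBot is blocked everywhere,
# all other bots are only kept out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_crawl(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if a crawler that respects robots.txt may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network access
    return parser.can_fetch(user_agent, url)


print(may_crawl("GPTBot", "https://yourwebsite.com/blog/post"))     # False
print(may_crawl("Googlebot", "https://yourwebsite.com/blog/post"))  # True
print(may_crawl("Googlebot", "https://yourwebsite.com/private/x"))  # False
```

A compliant crawler runs a check like this before each request. Nothing forces a bot to do so, which is why robots.txt alone is not a guarantee.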

Open your robots.txt file and add the following lines:

User-agent: GPTBot
Disallow: /

User-agent: ChatGPT-User
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: Gemini
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: anthropic-ai
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: XaiCrawler
Disallow: /

User-agent: Copilot
Disallow: /

User-agent: Meta-ExternalAgent
Disallow: /

User-agent: Amazonbot
Disallow: /

User-agent: Applebot-Extended
Disallow: /
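If you keep your list of bots in one place, you can generate these rules and check them before uploading. This minimal sketch uses Python's standard urllib.robotparser module. AI_BOTS is a hypothetical list modelled on the tokens above (edit it to suit), and yourwebsite.com is a placeholder. It confirms that every listed AI bot is blocked while Googlebot and Bingbot can still crawl.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical list modelled on the robots.txt tokens above; edit to suit.
AI_BOTS = [
    "GPTBot", "ChatGPT-User", "Google-Extended", "Gemini", "PerplexityBot",
    "ClaudeBot", "anthropic-ai", "CCBot", "XaiCrawler", "Copilot",
    "Meta-ExternalAgent", "Amazonbot", "Applebot-Extended",
]

# Search engine crawlers that should remain allowed.
SEARCH_BOTS = ["Googlebot", "Bingbot"]


def build_robots_txt(bots: list[str]) -> str:
    """Build one 'User-agent / Disallow: /' group per bot, separated by blank lines."""
    groups = [f"User-agent: {bot}\nDisallow: /" for bot in bots]
    return "\n\n".join(groups) + "\n"


def can_crawl_home(robots_txt: str, bot: str) -> bool:
    """Ask Python's robots.txt parser whether `bot` may fetch the home page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(bot, "https://yourwebsite.com/")


robots_txt = build_robots_txt(AI_BOTS)
print(robots_txt)

for bot in AI_BOTS + SEARCH_BOTS:
    status = "allowed" if can_crawl_home(robots_txt, bot) else "blocked"
    print(f"{bot}: {status}")
```

Python's parser is a reasonable stand-in for a compliant crawler, but each bot implements robots.txt matching itself, so small differences are possible.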

If you’re using:

- WordPress – Use an SEO plugin like Rank Math, Yoast SEO or All in One SEO to edit robots.txt.

- Blogger – Go to Settings → Crawlers and indexing → Enable custom robots.txt, then paste the code above.

⚠️ Important Notes

Not all bots respect robots.txt. It is a request, not an enforcement mechanism, so some bots may still crawl your site.

For stronger protection, you can add server-level blocks using .htaccess or a firewall rule.

If your site runs on an Apache server with mod_rewrite enabled, you can block bots at the server level.

Add this code to your .htaccess file:

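RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} (GPTBot|ChatGPT|Gemini|PerplexityBot|ClaudeBot|anthropic-ai|CCBot|XaiCrawler|Copilot) [NC]
RewriteRule .* - [F,L]

This returns a 403 Forbidden response to any request whose User-Agent header contains one of these names, even if the bot ignores robots.txt. It won’t stop bots that disguise their user agent. Google-Extended is not in this list because it is only a robots.txt token: Google crawls with its regular user agents, so a User-Agent rule for it would never match. Block it in robots.txt instead.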
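Before uploading the rule, you can check which User-Agent strings it would catch. This sketch uses Python's re module as a stand-in for Apache's regex engine, which behaves the same way for a simple alternation of names like this one. The shortened pattern and the sample User-Agent strings are illustrative assumptions, not an exhaustive list.

```python
import re

# Hypothetical, shortened copy of the names in the RewriteCond line;
# paste in your own alternation. re.IGNORECASE mirrors Apache's [NC] flag.
AI_BOT_PATTERN = re.compile(r"GPTBot|ChatGPT|PerplexityBot|ClaudeBot|CCBot", re.IGNORECASE)


def would_be_blocked(user_agent: str) -> bool:
    """Return True if the User-Agent header contains any of the listed names."""
    # RewriteCond patterns are unanchored, so re.search (not re.match)
    # is the closer analogue: the name can appear anywhere in the header.
    return AI_BOT_PATTERN.search(user_agent) is not None


# Illustrative User-Agent strings; check each vendor's docs for current values.
samples = [
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "ccbot/2.0",  # lowercase on purpose: still matched thanks to IGNORECASE
]

for ua in samples:
    verdict = "403 Forbidden" if would_be_blocked(ua) else "allowed"
    print(f"{verdict:13} <- {ua}")
```

Because matching is by substring, a short, generic name in the pattern (such as Copilot or Gemini) blocks any User-Agent that merely contains that word, so keep the names as specific as possible.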

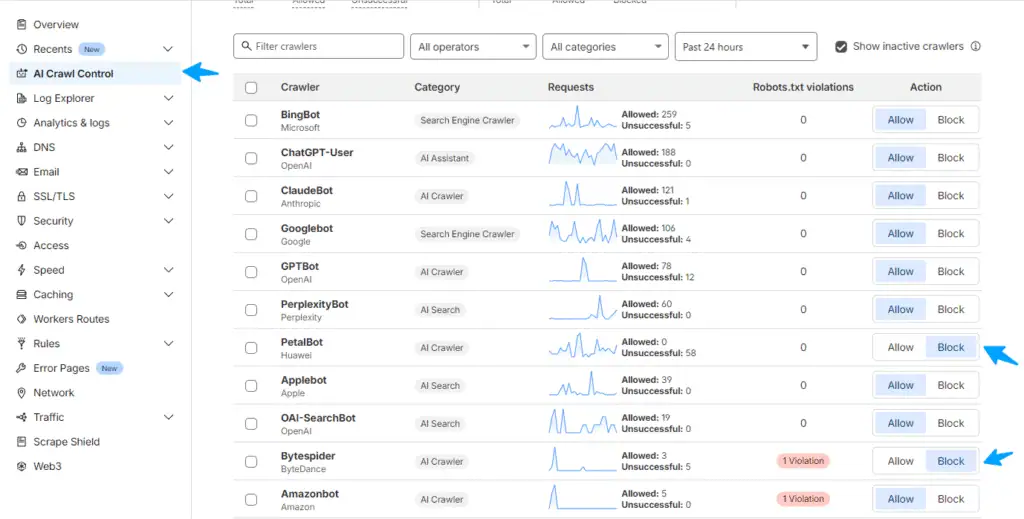

Block AI Bots Using Cloudflare Firewall Rules

You can also block AI bots with Cloudflare. In the Cloudflare dashboard, go to AI Crawl Control and block the bots you want. Make sure search engine crawlers such as Googlebot and Bingbot stay allowed, because they are needed to index your website.

Final Thoughts

Blocking AI bots is an easy way to protect your website’s content from being used without permission. By updating your robots.txt or .htaccess, you can control which bots can crawl your site.