FREE Indexing Checklist | Step-by-Step Guide for Solving Indexing Issues in Google

Many new websites face different types of indexing issues, but Search Console does not always make clear what the problem is or how to solve it easily.

To solve this problem, I have put together a free indexing checklist. It will help you solve your indexing issues in Google.

You need to learn the basics of indexing: how it works and how you can solve these problems. So, follow the steps below carefully to solve your indexing issues in Google.

First of all, if you are a WordPress user, then I recommend that you use the RankMath SEO plugin and set it up properly. If you are a Blogger user, then you can watch the video to set up the SEO settings in Blogger.

You can also follow the videos below for solving “discovered currently not indexed” issues.

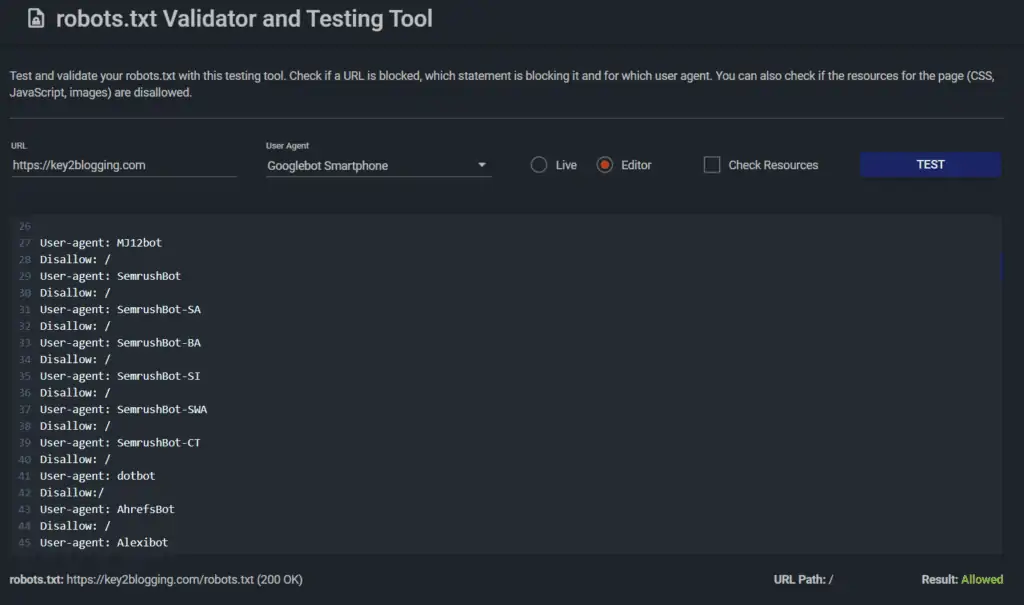

1. Check Disallow in robots.txt

This is one of the most common settings that people overlook. First, check whether Googlebot, Bingbot, and other crawlers are allowed to crawl the page. If you disallow them in your robots.txt file, then Google will not crawl those pages, and they will not be indexed properly in the SERPs.

You can use a free tool called “robots.txt Validator and Testing Tool” by TechnicalSEO to check the issue.

Just enter the URL of the webpage where you are facing the indexing issue, set the user agent to Googlebot, and click the test button. The tool will take a moment to check your website’s robots.txt file and then tell you whether the page is blocked or unblocked for Googlebot.

If the result below shows “allowed”, then you can go to the next step. If it shows “not allowed”, then you have to check your robots.txt rules.
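The same allowed or not allowed check can be sketched in Python with the standard library’s `urllib.robotparser`. This is only a rough sketch: the robots.txt content and the example.com URLs are made up for illustration, and in practice you would load your own site’s robots.txt file. Also note that Python’s parser applies the first matching rule, while Google applies the most specific one, so results can differ for complicated rule sets.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here instead of fetching a live file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A normal blog post is allowed; anything under /private/ is blocked.
print(is_allowed(SAMPLE_ROBOTS_TXT, "Googlebot", "https://example.com/blog/my-post/"))
print(is_allowed(SAMPLE_ROBOTS_TXT, "Googlebot", "https://example.com/private/draft/"))
```

If the second kind of result appears for a page you want indexed, the Disallow rule that matches it is the one to review.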

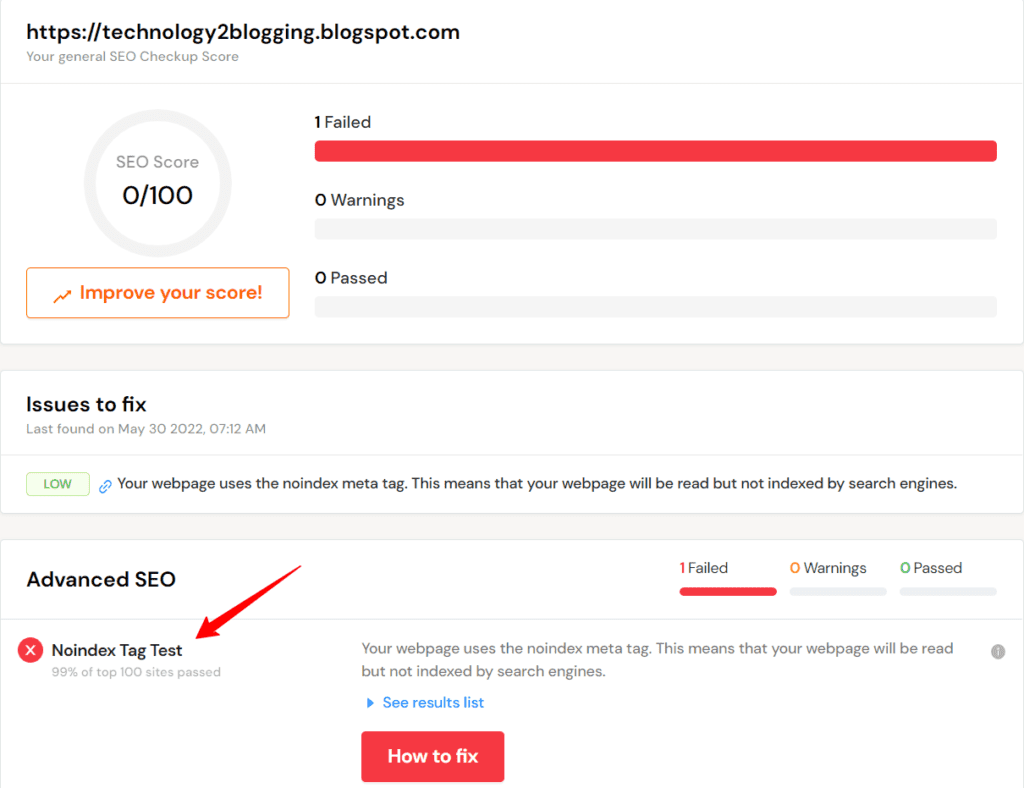

2. Check noindex tag in header

The noindex tag tells search engine bots not to index a page. So, if the tag is present in the header of your webpage, then you will face an indexing issue.

By adding the noindex tag, you have basically told Google not to index the page. So, you have to remove those tags to solve the issue.

<meta name="robots" content="noindex">

<meta name="googlebot" content="noindex">

So, look for code like this, and if it is present, then remove it from your website.

To check the noindex tag you can use the tool called “Noindex Tag Test” by seositecheckup.

Just enter the webpage URL in this tool, and it will look for noindex tags on that page. If a noindex tag is present, then it will show a warning like the one in the image below.

If you are using a Blogger site, then you can follow the instructions to solve these indexing issues in Blogger.

If you are using WordPress, then you should check the settings of your SEO plugins and remove the noindex settings on those pages.
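If you prefer to check a page’s HTML yourself, the noindex check from this step can be sketched with Python’s built-in `html.parser`. The class name, function name, and sample HTML here are made up for illustration. It only looks at the two meta tags shown above, so it will not notice a noindex sent through the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class NoindexFinder(HTMLParser):
    """Collect robots/googlebot meta tags whose content includes noindex."""

    def __init__(self):
        super().__init__()
        self.blocking_tags = []  # names of the meta tags that carry noindex

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        content = (attributes.get("content") or "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.blocking_tags.append(name)


def find_noindex(html: str) -> list:
    """Return the names of the meta tags that tell bots not to index the page."""
    finder = NoindexFinder()
    finder.feed(html)
    return finder.blocking_tags


# Hypothetical page source; paste your own page's HTML here instead.
SAMPLE_HTML = """
<html><head>
<title>Sample page</title>
<meta name="robots" content="noindex">
</head><body>Hello</body></html>
"""

print(find_noindex(SAMPLE_HTML))  # ['robots']
```

An empty list means neither of the two meta tags is blocking the page.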

3. Update your Sitemap

If you are facing an indexing issue, then you should check your sitemap file to see whether the URL is present in the sitemap.

You can find this out by pasting your page link into the URL Inspection tool in Search Console.

Here are the most common sitemap links used by various sites.

https://example.com/sitemap_index.xml

https://example.com/sitemap.xml

https://example.com/post-sitemap.xml

https://example.com/page-sitemap.xml

Just replace the website link and search for your sitemap page. Now you can search for the page by pressing Ctrl + F on your keyboard.

If you don’t find the page in the sitemap, then you have to check your sitemap settings.
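Instead of searching by hand with Ctrl + F, the sitemap lookup can also be sketched in Python. This is a minimal sketch that assumes the sitemap follows the standard sitemaps.org XML format; the sample sitemap and its example.com URLs are made up, and you would normally download your own sitemap file first.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content, used here instead of a downloaded file.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/first-post/</loc></url>
  <url><loc>https://example.com/second-post/</loc></url>
</urlset>
"""


def sitemap_urls(sitemap_xml: str) -> set:
    """Return every URL listed in a <loc> element of the sitemap."""
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    return {
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    }


urls = sitemap_urls(SAMPLE_SITEMAP)
print("https://example.com/first-post/" in urls)    # True
print("https://example.com/missing-post/" in urls)  # False
```

If your site uses a sitemap index such as sitemap_index.xml, the URLs this returns are the child sitemaps, and each of those has to be checked in turn.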

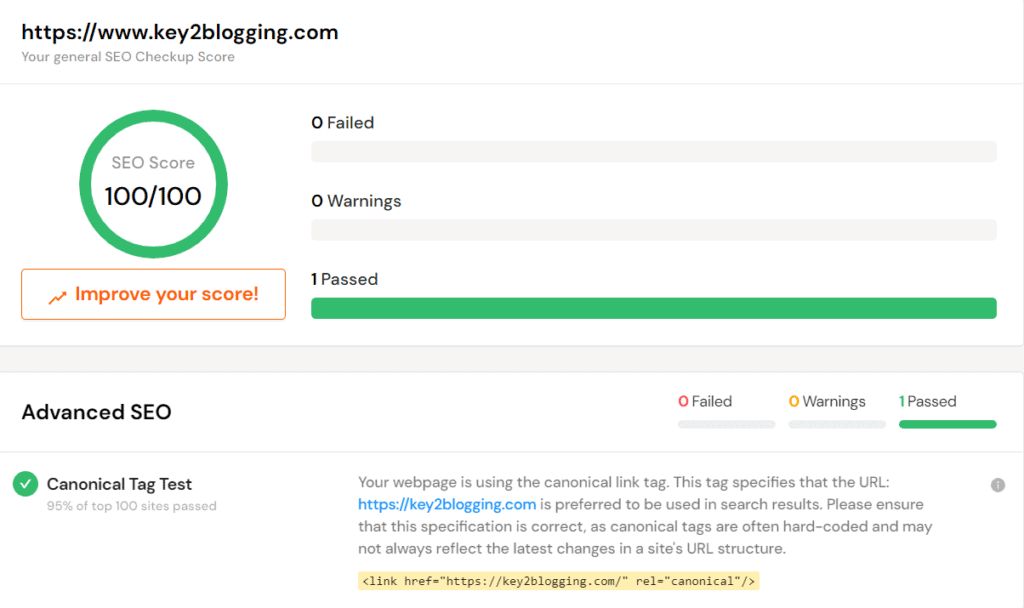

4. Check canonical tags

If you are facing an indexing issue, then the cause may be a canonical issue. You have to check whether a proper canonical tag is used on your website.

You can check for canonical issues by pasting the webpage URL into the “URL inspection tool” of Google Search Console.

Search Console will tell you the canonical URL of that page. According to Google “A canonical URL is the URL of the page that Google thinks is most representative from a set of duplicate pages on your site”.

For example, if your webpage is accessible with http, https, www, etc., then Googlebot considers these to be separate pages. To solve this issue, you have to set one of them as the canonical URL, so Googlebot will index that URL only and ignore all other similar links.

You can also use the “Canonical Tag Test” tool by seositecheckup to check your website for canonical issues.

Here, as you can see, I don’t use www in the URL, and if someone tries to open it with www, they will automatically be redirected to the non-www URL. In this case, I have set the canonical URL to the non-www version.

So, make sure to test the page URL where you are facing indexing issue in Google.
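To see which canonical URL a page declares in its own HTML, the check can be sketched in Python as follows. The class name, function names, and sample HTML are made up for illustration. Keep in mind that this only reads the canonical tag you have set; Search Console shows the canonical that Google actually selected, which may be different.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def canonical_url(html: str):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


def is_self_canonical(page_url: str, html: str) -> bool:
    """True if the page declares itself (ignoring a trailing slash) as canonical."""
    canonical = canonical_url(html)
    return canonical is not None and canonical.rstrip("/") == page_url.rstrip("/")


# Hypothetical page source with a non-www canonical URL.
SAMPLE_HTML = '<head><link rel="canonical" href="https://example.com/my-post/"></head>'

print(canonical_url(SAMPLE_HTML))                                      # https://example.com/my-post/
print(is_self_canonical("https://example.com/my-post/", SAMPLE_HTML))  # True
print(is_self_canonical("https://www.example.com/my-post/", SAMPLE_HTML))  # False
```

The www version failing the check is what you want here, because it means the duplicate points to the non-www page instead of to itself.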

5. Check Crawling Issues

If Googlebot is not able to crawl your website properly, then you may face indexing problems on your website.

Crawling issues can occur for various reasons, such as:

- Internal broken links

- Pages with denied access (403 Status code)

- Server errors

- Limited server capacity (slow hosting)

- Wrong pages in sitemap

- Redirect loop

- Slow load speed

- Wrong JavaScript and CSS usage

- Flash content

So, there are a lot of factors that affect the crawlability of your website. If you are not posting content regularly, then Google may delay the crawling process. Googlebot will visit your blog less often, so you will see a delay in the indexing of new content.

So, try to post content at regular intervals. If you schedule your content in a regular pattern, then Googlebot is likely to crawl and index your pages more quickly.

You also need to delete unnecessary pages from your site to improve its crawlability. I recommend that you block the indexing of category and tag pages and only allow the blog post URLs. In this way, you can save some of your website’s crawl budget.
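Some of the causes listed in this step, such as denied access, server errors, and redirect loops, are easy to spot once you have the status codes and redirect targets of your pages. Below is a rough Python sketch of that idea. The function names, the labels, and the redirect map are made up for illustration; you would collect the real data from a crawl of your own site.

```python
def classify_status(status_code: int) -> str:
    """Map an HTTP status code to a rough crawl-health label."""
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect"
    if status_code == 403:
        return "access denied"
    if 400 <= status_code < 500:
        return "broken link or client error"
    if 500 <= status_code < 600:
        return "server error"
    return "unknown"


def find_redirect_loop(redirects: dict, start: str):
    """Follow redirects from start; return the looping path, or None if it ends."""
    path = []
    current = start
    while current in redirects:
        if current in path:
            return path + [current]  # we came back to a URL we already visited
        path.append(current)
        current = redirects[current]
    return None


# Hypothetical redirect map: /a and /b point at each other, /old ends at /new.
SAMPLE_REDIRECTS = {"/a": "/b", "/b": "/a", "/old": "/new"}

print(classify_status(403))                          # access denied
print(find_redirect_loop(SAMPLE_REDIRECTS, "/a"))    # ['/a', '/b', '/a']
print(find_redirect_loop(SAMPLE_REDIRECTS, "/old"))  # None
```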

6. Check Internal Link

Internal links are a great way to help Googlebot discover your content faster. They also help bots understand the importance of a piece of content and index the page faster.

So, always link to your new pages from pages that are already indexed. This increases the chances of the content being indexed faster.

Keep the website structure clear, and try to link to all important pages from the homepage, header, and footer sections of your website.
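To confirm that an already indexed page really links to your new page, the internal link check can be sketched in Python like this. The class name, function name, and sample HTML are made up for illustration, and the sketch treats only links on exactly the same host as internal.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Turn relative links such as /new-post/ into absolute URLs.
            self.links.add(urljoin(self.base_url, href))


def internal_links(base_url: str, html: str) -> set:
    """Return the links in html that stay on the same host as base_url."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    return {link for link in collector.links if urlparse(link).netloc == host}


# Hypothetical homepage source with two internal links and one external link.
SAMPLE_HTML = """
<a href="/new-post/">New post</a>
<a href="https://example.com/about/">About</a>
<a href="https://other.example.org/">Partner site</a>
"""

links = internal_links("https://example.com/", SAMPLE_HTML)
print("https://example.com/new-post/" in links)  # True
```

Running this against your homepage HTML shows whether the new page is reachable from it.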

7. Check your Content Quality

Content quality is very important for getting a page indexed in Google. If the page has placeholder text and images, artificially written content, or plagiarized content, then Googlebot may not index it in search.

So, keep your content unique and original, and provide some value to your readers. Content quality is why most new sites face indexing issues.

As Google doesn’t have sufficient data about a new website, and such sites don’t have any authority or backlinks, it takes Google longer to analyze the pages and index them in search results.

So, create unique content, add internal links, and mention trusted external sources to support your points. Also, try to build some backlinks to your website through guest posting and email outreach.

8. Follow Webmaster Guidelines

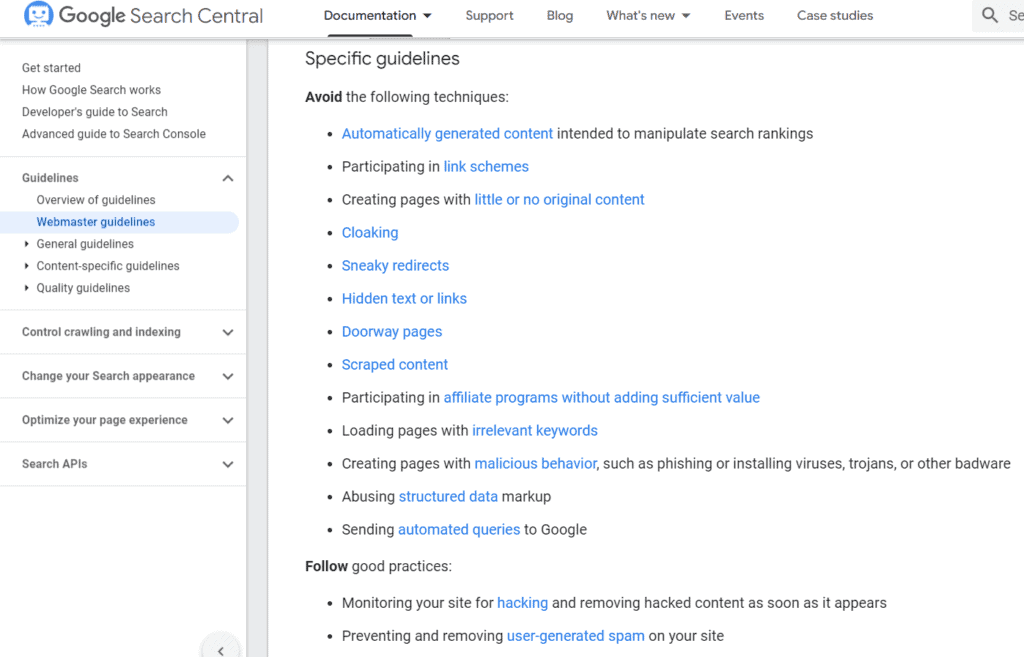

It is better to know Google’s complete webmaster guidelines and follow them.

Read the Quality guidelines, avoid the practices they warn against, and try to reduce user-generated spam on your site. Also, protect your site from malware attacks.

Conclusion

If you follow this indexing checklist properly, then you can fix most of your indexing problems in Google. I have prepared this free indexing checklist to help bloggers learn how to solve these problems themselves.

If you still have any doubts, then you can ask me in the comment section.

SEO SERVICES BY US

If you want me to do a proper SEO audit of your website or do proper on-page SEO optimization for better ranking in Google, then you can contact me here. You can also connect with me on social media.